Don’t Lag on Understanding Latency

By Chris Bastian

SVP, Engineering/CTO, SCTE-ISBE

Network operators have built their networks for speed and continue to make significant investments to stay ahead of the speed demand curve. This is driven by the demands of new services and applications, as well as by a healthy dose of marketing and competition. Hardly a commercial break goes by in which wireline and wireless network operators aren’t touting their “fastest” speeds. While speed will continue to be a major driver and differentiator of superior networks, lately another variable has been getting a good deal of attention: lower latency.

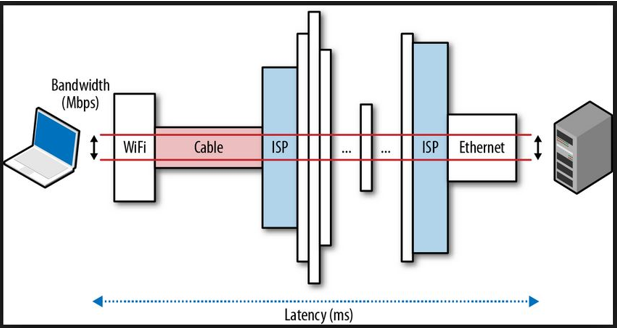

How is latency defined? It is the time it takes a data packet to get from point A to point B, usually measured in milliseconds. When comparing latency times, it is important to keep in mind where points A and B are located. Is the measurement made from just the border/demarc of the network operator, or does it include the home WiFi segment, the customer premises equipment, and/or the server delay of the application? And is it one-way or round-trip latency? Confusing the two can leave you with a figure that is double, or half, what you thought you had. Ookla, a major online speed and latency test company, suggests that the following may affect your latency results:

- Radio capabilities (WiFi and cellular) of your device;

- What test server you are connecting to; and

- What service or browser you use to launch the latency test.
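To make the point about measurement boundaries concrete, here is a minimal sketch that adds up per-segment delays. The segment names and millisecond values are hypothetical, chosen only for illustration, and the round-trip figure assumes the return path mirrors the forward path, which real networks do not guarantee.

```python
# Hypothetical one-way delays in milliseconds for each segment of the path.
# These numbers are illustrative assumptions, not measurements.
SEGMENTS_MS = {
    "home_wifi": 3.0,
    "access_network": 8.0,
    "operator_core": 4.0,
    "application_server": 5.0,
}


def one_way_ms(segments, include=None):
    """Sum one-way delay over the chosen segments (all of them if include is None)."""
    names = segments.keys() if include is None else include
    return sum(segments[name] for name in names)


def round_trip_ms(segments, include=None):
    """Round-trip estimate, assuming the return path mirrors the forward path."""
    return 2 * one_way_ms(segments, include)


if __name__ == "__main__":
    operator_only = one_way_ms(SEGMENTS_MS, ["access_network", "operator_core"])
    print("operator segment, one-way (ms):", operator_only)
    print("end to end, one-way (ms):", one_way_ms(SEGMENTS_MS))
    print("end to end, round trip (ms):", round_trip_ms(SEGMENTS_MS))
```

With these assumed values, the same connection could be quoted at several different figures depending on which segments are included and whether the number is one-way or round-trip.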

It is important to note that latency does not stay constant over time. Applications that you are using, as well as other network traffic between the cable operator segment and the service provider segment, will cause latency to vary throughout the day.

Source: https://hpbn.co

In the past, service application developers looked at the most common latency times their customers were experiencing and designed their application experiences with that “lowest common denominator” in mind. Today, new and emerging applications can’t afford to “build in” this expected delay. Online gamers are most often cited as looking for the lowest network latency, since whether they win or lose depends heavily on click response (e.g., first-person shooter games). Beyond gaming, applications supporting financial, telemedicine and autonomous vehicle services depend on the difference a few milliseconds of latency can make.

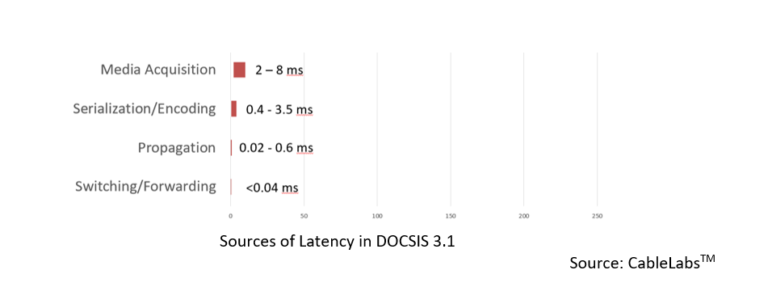

There are several industry initiatives underway focusing on minimizing network latency, including in the DOCSIS® and WiFi access networks. In both of these network segments, queueing is the biggest contributor to latency. At the recent Cable-Tec Expo in New Orleans, Greg White from CableLabs® presented “Low Latency DOCSIS – Overview and Performance Characteristics,” including the chart below, which shows how significant queueing is to latency.

To address this queueing challenge, a team at CableLabs® is working on Low Latency DOCSIS. The technology under development is using a combination of:

- A separate low-latency service flow/queue;

- Queue protection, which constantly monitors whether traffic in the low-latency queue is “non-queue-building”;

- Congestion control using a technique called “active queue management” (AQM); and

- Upstream scheduling which supports both a faster grant/loop cycle, as well as a proactive grant service (PGS) intended for ultra-low latency services.
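A back-of-the-envelope sketch can show why a separate, short queue matters. It is a toy model, not the Low Latency DOCSIS algorithm: it only computes how long a packet waits for the packets ahead of it to drain at the link rate. The function name, queue depths, packet size, and link rate are all assumptions made for illustration.

```python
def queueing_delay_ms(packets_ahead, packet_bytes, link_mbps):
    """Time in ms for the packets already queued to drain at the link rate.

    Toy model: a single FIFO served at a constant rate, ignoring scheduling
    overhead, grant cycles, and retransmissions.
    """
    if link_mbps <= 0:
        raise ValueError("link_mbps must be positive")
    bits_ahead = packets_ahead * packet_bytes * 8
    bits_per_ms = link_mbps * 1000  # 1 Mbps = 1,000,000 bits/s = 1,000 bits/ms
    return bits_ahead / bits_per_ms


if __name__ == "__main__":
    # Assumed scenario: a 50 Mbps upstream and 1,500-byte packets.
    shared = queueing_delay_ms(packets_ahead=100, packet_bytes=1500, link_mbps=50)
    separate = queueing_delay_ms(packets_ahead=2, packet_bytes=1500, link_mbps=50)
    print(f"behind 100 queued packets: {shared:.2f} ms")
    print(f"behind 2 queued packets:   {separate:.2f} ms")
```

Under these assumptions, a latency-sensitive packet stuck behind a large backlog waits tens of milliseconds, while the same packet in a nearly empty queue waits well under one millisecond.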

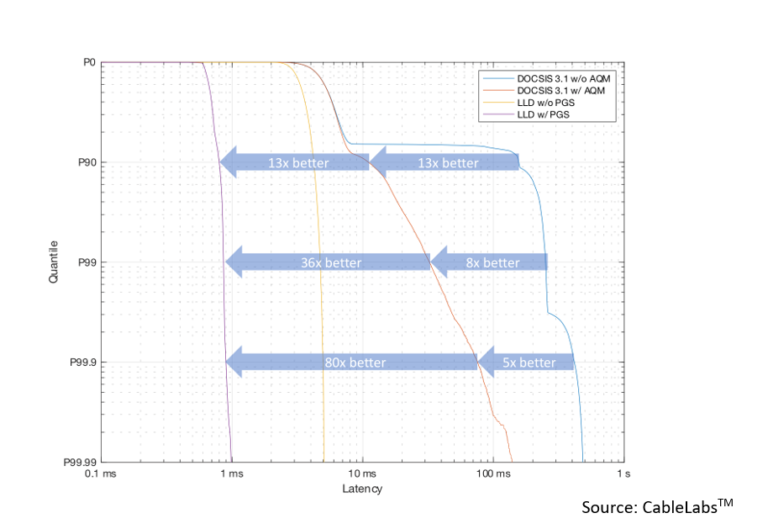

With regard to the variability of latency, the combination of the above techniques is achieving latency results in the single-digit milliseconds for 99 percent of packets. The chart below, based on test simulations conducted at CableLabs, shows this variability for four scenarios:

- DOCSIS 3.1 without active queue management

- DOCSIS 3.1 with active queue management

- Low latency DOCSIS without proactive grant service

- Low latency DOCSIS with proactive grant service

As can be observed, Low Latency DOCSIS with proactive grant service is demonstrating 1 ms latency at the 99th percentile.
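For readers less familiar with percentile figures, the sketch below computes a 99th-percentile latency from a list of samples using the nearest-rank method. The sample values are made up for illustration and are not taken from the CableLabs simulations; the function name is likewise an assumption.

```python
import math


def percentile_nearest_rank(samples, pct):
    """Return the nearest-rank percentile of samples (pct in the range 1..100)."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    # Nearest-rank: the smallest value with at least pct percent of samples at or below it.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical latencies in ms: most packets are fast, a few hit a long queue.
    samples = [1.0] * 98 + [20.0] * 2
    print("median (ms):", percentile_nearest_rank(samples, 50))
    print("99th percentile (ms):", percentile_nearest_rank(samples, 99))
```

In this made-up data set the median looks excellent, yet the 99th percentile exposes the slow tail, which is why tail figures are the ones quoted for latency-sensitive services.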

To move these latency advances into production, CMTS support will be needed for the downstream features, and both CMTS and cable modem support will be needed for the upstream features.

Low latency is one of the fundamental features of 10G networks, along with greater speeds and capacities, as well as greater security and higher reliability. (To read more about 10G networks, check out www.10gplatform.com.) It is encouraging to see that, once again, DOCSIS is evolving to address the service demands that are so central to our customers’ lives.